Nanotechnology’s influence in our daily life is reflected in mobile communication tools, medical diagnostics and new treatments, the use of data by companies and governments, and its accumulation in the cloud. Society reacts slowly to rapidly unfolding technological changes. Nanotechnology, with its atomic-scale capabilities in which much of the natural and physical world’s dynamics unfold, has the potential to make far more dramatic leaps than humanity has encountered in the past. Evolutionary changes—the natural world’s manipulation, such as through genes—and emergent changes—the physical world’s manipulation and autonomy, such as through artificial intelligence—can now be brought together to cause profound existential changes. The complex existential machines thus created have a ghost in them, and humanity needs to shape technology at each stage of the machine building so that it is an angelic ghost.

Even some of your materialistic countrymen are prepared to accept—at least as a working hypothesis—that some entity has—well, invaded Hal. Sasha has dug up a good phrase: “The Ghost in the Machine.”

Arthur C. Clarke (in 2010: Odyssey Two, 1982)

The official doctrine, which hails chiefly from Descartes, is something like this. … every human being has both a body and a mind. Some would prefer to say that every human being is both a body and a mind. His body and his mind are ordinarily harnessed together, but after the death of the body his mind may continue to exist and function. … Such in outline is the official theory. I shall often speak of it, with deliberate abusiveness, as ‘the dogma of the Ghost in the Machine.’ I hope to prove that it is entirely false, and false not in detail but in principle.

Gilbert Ryle (in The Concept of Mind, 1949)

Six years ago, in an earlier article¹ in this book series, we explored the implications of the ability to observe and exercise control at the atomic and molecular scale, also known as nanotechnology. We concluded with speculations and numerous questions regarding the physical and biological complexity that the future will unfold as a result of this technology’s development. Our reasoning was that nature’s complexity arises in the interactions that take place at the atomic scale: atoms building molecules, complex molecules in turn leading to factories, such as the cell, for reproduction, and the creation of multifaceted hierarchical systems. Physical laws, such as the second law of thermodynamics, still hold, so this development of complexity takes place over long time scales in highly energy-efficient systems. The use of nanotechnology’s atomic-scale control—nature’s scale—has brought continuing improvements in efficiency in physical systems and a broadening of its utilization in biology and elsewhere. This ability to take over nature’s control by physical technology gives humans the ability to intervene beneficially, but also raises existential questions about man and machine. Illustrative examples were emergent machines, self-replicating automatons in which hardware and software have fused as in living systems, and evolution machines, in which optimization practiced by engineering modifies the evolutionary construct. Computational machines of the emergent variety now exist: they improve themselves as they observe and manipulate more data, retune themselves by changing how the hardware is utilized, and spawn copies of themselves by partitioning existing hardware, even if they do not yet build themselves physically except through rudimentary 3D printing. Numerous chemicals and drugs are now built using cells and enzymes as biological factories.

The existential questions with which we concluded that article have only grown sharper in the interval. Rapid development of CRISPR (clustered regularly interspaced short palindromic repeats) and of machine learning evolving into artificial intelligence (AI)² have brought us to a delicate point.

This paper, a scientific and philosophical musing, draws lessons from these intervening years to emphasize the rapid progress made, and then turns to what this portends. We look back at how physical principles have influenced nanotechnology’s progress and evolution, where it has rapidly succeeded and where it has not, and for what reasons. As new technologies appear and cause rapid change, society’s institutions, and we as individuals, are slow to respond by accentuating the positives and suppressing the negatives through the restraints, community-based and personal, that bring a healthy balance. Steam engines, when invented, were prone to explosions. Trains had accidents because signaling systems were absent. Society put together mechanisms for safety and security. Engines still explode and accidents still happen, but at a level that society has deemed acceptable. We are still working on the control of plastics, and failing at global warming. These latter cases reflect the long latency of community and personal response.

In the spirit of the past essay, this paper is a reflection on the evolution of the nanotechnology-catalyzed capabilities that appear to be around the corner, as well as on the profound questions that society needs to start reflecting and acting on.

This past decade has brought numerous passive applications of nanotechnology into general use. Because small-scale phenomena give specific materials a variety of unique properties, nanotechnology is increasingly pervasive in numerous products, though still at a relatively high cost. These applications range from the relatively simple to the quite complex. Coatings give strength and high corrosion and wear resistance. Medical implants (stents, valves, pacemakers, and others) employ such coatings, as do surfaces that require increased resistance in adverse environments, from machine tools to large-area metal surfaces. Since a material’s small size changes its optical properties, trivial but widespread uses, such as sunscreens, and much more sophisticated uses, such as optically mediated interventions in living and physical systems, have become possible. The mechanical enhancements deriving from nanotubes have become part of the arsenal of lightweight composites. Nanoscale size and surfaces have also made improved filtration of viruses and bacteria possible. Batteries exploit porosity and surface properties to improve utilitarian characteristics such as energy density and charge retention, and the proliferation of electric cars and battery-based storage promises large-scale utilization. Surfaces make it possible to take advantage of molecular-scale interactions for binding. This same mechanism also allows specific targeting in the body for both enhanced detection and drug delivery. So, observation of cancerous growths in particular, and their elimination, has become an increasing part of the medical tool chest. This same surface-centric property makes possible sensors that detect specific chemicals in the environment.
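
The emphasis on surfaces follows from simple geometry. For an idealized sphere of radius r, surface area divided by volume is 3/r, so each thousandfold reduction in size gives a thousandfold more surface per unit of material. The sketch below assumes spherical particles, and the radii are illustrative choices, not values from the text.

```python
import math

def surface_to_volume(radius_m):
    """Surface-area-to-volume ratio of a sphere, in 1/m: (4*pi*r^2) / ((4/3)*pi*r^3) = 3/r."""
    area = 4 * math.pi * radius_m ** 2
    volume = (4 / 3) * math.pi * radius_m ** 3
    return area / volume

# Illustrative radii: a 1 mm grain, a 1 micrometre particle, a 10 nm nanoparticle.
for label, r in [("1 mm", 1e-3), ("1 um", 1e-6), ("10 nm", 10e-9)]:
    print(f"{label:>6}: {surface_to_volume(r):.3g} per metre")
```

Going from a micrometre-scale particle to a 10 nm one raises the surface available per unit volume a hundredfold, which is why binding, catalysis, filtration, and sensing all benefit.
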

The evolutionary machine theme’s major role has consisted in its ability, through large-scale, nature-mimicking random trials conducted in a laboratory-on-a-chip, to sort, understand, and then develop a set of remedies that can be delivered to the target where biological mechanisms have gone awry. Cancer, the truly challenging frontier of medicine, has benefited tremendously from the observation and intervention provided by nanotechnology’s tool chest, even though this truly complex behavior (a set of many different diseases) is still very far from any solution except in a few of its forms. The evolutionary machine theme is also evident in the production methods used for a variety of simple-to-complex compounds that have become commonplace, from the strong oxidant hydrogen peroxide made via enzymes to an antibody treatment for Ebola virus produced via tobacco plants.

Admittedly, much of this nanotechnology usage has been in places where cost has been of secondary concern and the specific property has been appealing enough to make a product attractive. This points to at least one barrier: the cost of manufacturing, which has remained a challenge. Another has been the traditional problem of over-ebullience that drives market culture. Graphene, nanotubes, and other such forms are still in search of large-scale usage. An example of this over-ebullience is the space elevator based on carbon nanotubes, which caught popular attention. Thermodynamics dictates the probabilities of errors, defects for example, in assemblies. And although a short nanotube can exhibit enormous strength, once one makes it long enough, even a single defect has serious repercussions. So, space elevators remain science fiction.
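
A minimal sketch of the length argument, assuming (our simplification, not the text's) that defects occur independently along the tube at an average linear density, so that the number of defects is Poisson-distributed and a tube of length L is defect-free with probability exp(-density × L). The density `RHO` is a hypothetical placeholder, not a measured value for any real nanotube.

```python
import math

def defect_free_probability(length_m, defects_per_m):
    """P(zero defects) for a Poisson defect process with the given linear density."""
    return math.exp(-defects_per_m * length_m)

# Hypothetical density: on average one defect per micrometre of tube (assumed).
RHO = 1e6  # defects per metre

for label, length in [("100 nm", 100e-9), ("1 um", 1e-6), ("10 um", 10e-6), ("1 mm", 1e-3)]:
    print(f"{label:>6}: P(defect-free) = {defect_free_probability(length, RHO):.3g}")
```

Whatever density one assumes, the probability decays exponentially with length, so the enormous lengths a space-elevator cable would need put a flawless tube out of reach.
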

There is one place, though, where the cost barrier continues to be overcome at an incredible rate. Among human-centric applications, electronics in the information industry (computation and communication) has seen the most dramatic cost reduction and expansion of widespread usage. The cellphone, now an Internet-accessing and video-delivering smartphone, has been an incredibly beneficial, transformative utility for the poor in the Third World.

The nature of archiving and accessing data has changed through the nanoscale memories and nanoscale computational resources that increasingly exist in a far-removed “non-place”, euphemistically the cloud. These are in turn accessed through a nanoscale-enabled plethora of developments in communications, whether wireless or optical, and through the devices that we employ. Videos, texting, short bursts of communication, and rapid dissemination of information are ubiquitous. Appliances, large and small, are being connected together and controlled as an Internet of Things, in a widening fabric at home and at work, for goals such as reducing energy consumption, improving health, or taking routine tasks away from human beings.

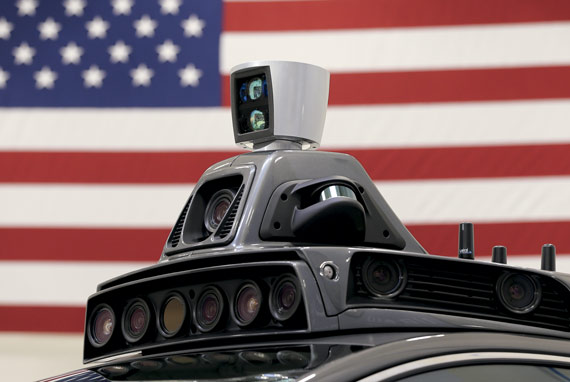

The availability of information and the ability to draw inferences from it have brought machine learning, and with it artificial intelligence (AI), to the fore. Data can be analyzed and decisions made autonomously, in a rudimentary form, in the emergent machine sense. Artificial intelligence coupled to robotics—the physical use of this information—is also slowly moving from factories to human-centric applications, such as autonomous driving, in which sensing, inferencing, controlling, and operating all come together. This is all active usage that has an emergent and evolutionary character.

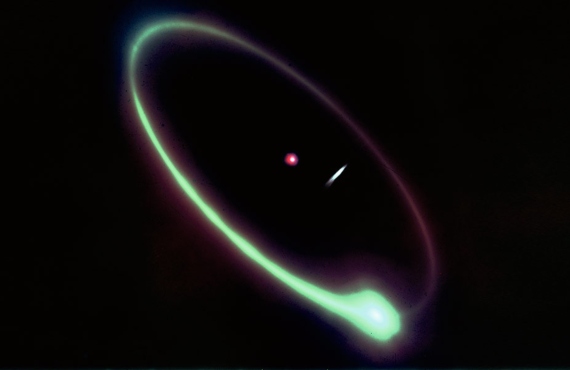

Quantum computation is another area that has made tremendous progress during this period. Quantum computing employs the entangled superposition of information accessible at the quantum scale, which becomes possible at the nanoscale, to carry out the computation. It is a very different style from the traditional one of deterministic computing, in which bits are classical: each is either, say, a “0” or a “1” Boolean bit. We can transform them through computational functions: a “0” to a “1”, for example, by an inverter; or one collection of bits (one number) and another collection (another number), through a desired function, into a third collection, which is another number. Adding or multiplying is such a functional operation. At each stage of computation, these “0”s and “1”s are transformed deterministically. In classical computation, one cannot, in general, get back to the starting point once one has performed transformations, since information is discarded along the way. A multiplication product, for instance, can usually arise from multiple combinations of multiplicands and multipliers: 12 is 3 × 4, but also 2 × 6. Quantum bits as entangled superposed states are very different. A single quantum bit is a superposition of “0” and “1”; until it is measured, it is not definitely either one. When we measure it, we find a “0” or a “1”, with probabilities set by the superposition. A two-quantum-bit system can be entangled, with the two bits interlinked. For example, it could be a superposition in which, if the first is a “0”, the second is a “1”, and if the first is a “1”, the second is a “0”. This is an entangled superposition. Only when we make a measurement do we find out whether it is the “01” combination or the “10” combination.
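
Two claims in this paragraph can be checked in a few lines: multiplication discards information (many input pairs give one product), and measuring the entangled state yields only “01” or “10”. The sketch is a toy that assumes real amplitudes stored in a dictionary; the helper names `factor_pairs`, `outcome_probabilities`, and `measure` are our own, and the code illustrates the Born rule rather than how a quantum computer is programmed.

```python
import math
import random
from itertools import product

def factor_pairs(n):
    """Every (multiplicand, multiplier) pair of positive integers whose product is n."""
    return [(a, b) for a, b in product(range(1, n + 1), repeat=2) if a * b == n]

# The entangled two-qubit state described above: equal amplitudes on "01" and "10".
# Keys are basis labels (first bit, second bit); values are amplitudes.
amp = 1 / math.sqrt(2)
entangled = {"00": 0.0, "01": amp, "10": amp, "11": 0.0}

def outcome_probabilities(state):
    """Born rule: the probability of each measured outcome is |amplitude|**2."""
    return {label: abs(a) ** 2 for label, a in state.items()}

def measure(state, rng):
    """Draw one classical outcome according to the Born-rule probabilities."""
    probs = outcome_probabilities(state)
    labels = list(probs)
    return rng.choices(labels, weights=[probs[k] for k in labels])[0]

print(factor_pairs(12))  # many input pairs, one output: the inputs cannot be recovered
rng = random.Random(0)
print(sorted({measure(entangled, rng) for _ in range(1000)}))  # only "01" and "10" can occur
```

Outcomes with zero amplitude are never drawn, which is the sense in which the two bits are linked: learning one bit fixes the other.
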

A system composed of a large number of quantum bits can hold far more of these linkages, and one can manipulate them through the computation, without measuring the result, while the entanglement and its transformations continue to exist. While performing this computation, without having observed the result, one can actually go back to the starting point, since no information has been discarded. So, the computation proceeds, and only when we make a measurement do we find out the result, which lies in our classical world. It is this ability, and the transformations within it that keep the entanglement and its related possibilities open (unlike their discarding in the classical mode), that endows quantum computing with properties surpassing those of classical computation. Fifty-quantum-bit systems exist now. This is almost the point at which quantum computation begins to achieve capabilities superior to those of classical computation. Numerous difficult problems become potentially solvable: cryptography, the earliest rationale for pursuing this approach, but also many more practical and interesting problems, such as understanding molecules and molecular interactions and working toward their further complexity, including drug discovery. Fifty quantum bits allow far more complexity to be computed: their joint state spans 2⁵⁰, roughly 10¹⁵, amplitudes. Since nature is quantum in its behavior at the smallest scales, where the diversity of interactions occurs, and classical behavior is a correspondence outcome, that is, a highly likely statistical outcome, quantum computing represents a way that we have now found to simulate how nature itself computes. A new style of computing is being born.
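
The reversibility claim can be illustrated with a tiny state-vector sketch built from our own helper functions rather than any quantum-computing library. It constructs the entangled state described earlier from three standard gates (a Hadamard, a controlled-NOT, and a NOT), then applies the same gates in reverse order to recover the starting state up to floating-point rounding. Real amplitudes suffice for these particular gates. The last line shows the scale behind fifty quantum bits: a classical description needs 2^50 amplitudes.

```python
import math

# Two-qubit state vector, index = 2*q0 + q1, i.e. basis |00>, |01>, |10>, |11>.
def hadamard_q0(s):
    """Hadamard on the first qubit: mixes |0 q1> and |1 q1> for each value of q1."""
    h = 1 / math.sqrt(2)
    out = s[:]
    for q1 in (0, 1):
        a, b = s[q1], s[2 + q1]
        out[q1], out[2 + q1] = h * (a + b), h * (a - b)
    return out

def cnot_q0_q1(s):
    """Controlled-NOT: flip q1 when q0 is 1 (swap the |10> and |11> amplitudes)."""
    return [s[0], s[1], s[3], s[2]]

def x_q1(s):
    """NOT on the second qubit: swap amplitudes that differ only in q1."""
    return [s[1], s[0], s[3], s[2]]

start = [1.0, 0.0, 0.0, 0.0]                 # |00>
forward = [hadamard_q0, cnot_q0_q1, x_q1]    # produces (|01> + |10>)/sqrt(2)

state = start
for gate in forward:
    state = gate(state)

# Each of these gates is its own inverse, so undoing the circuit means applying
# the same gates in reverse order; no information was discarded along the way.
recovered = state
for gate in reversed(forward):
    recovered = gate(recovered)

print([round(a, 6) for a in state])
print([round(a, 6) for a in recovered])
print(f"amplitudes for 50 qubits: 2**50 = {2**50:,}")
```

Contrast this with the classical multiplication above: there is no gate sequence that turns 12 back into 3 and 4, because the product alone does not say which pair produced it.
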

These broad and pervasive changes, still in their infancy, are very much on the same scale as the changes that arose with the invention of the printing press and of mechanized transportation. The press democratized learning and information delivery. Mechanized transportation made the world smaller and eased the delivery of goods. Both were instrumental in making possible a more efficient way (in time, but also in energy and in other dimensions) of human uplifting. Books also deliver vile content, and transportation is a convenient tool for terrorists and government crackdowns. Society has found ways to limit these negative effects, and keeps finding more as new mutations crop up. Deleterious attributes are also observable in the new technologies. Mobile communication instruments and ubiquitous information availability have changed us. They draw us away from careful, longer-range thinking and articulation, and they shape how we react to any information, thanks to the trust that the written word has instilled in us. Financial markets, social interactions, and even our family interactions show consequences of this ubiquity of access that has arisen from the nanoscale. Drones, as no-blood-on-my-hands machines of death, are now ubiquitous in conflicts, exploiting the technology in ways that trivialize death and human destiny.

This evolution in artificial intelligence, robotics, and autonomy, the merging of emergent and evolutionary machinery, is raising profound questions. Society is struggling to grasp even a rudimentary understanding of them, an understanding it needs in order to tackle rationally, from both a philosophical and a scientific perspective, the many problems that will most certainly arise, just as they did at earlier moments of technology-seeded inflection.

I would like to explore this segue by looking into the coming era’s possibilities and by drawing together thoughts on where this comes from and where it could lead, through some unifying ideas across the physical and natural worlds that nanotechnology integrates.

The second law of thermodynamics, whose origins go back to Sadi Carnot’s work of 1824, says that in an isolated system entropy never decreases; in any real, irreversible process it increases. Entropy, in a simplistic sense, is a measure of randomness. The law says that, absent any flow of energy and matter into or out of a system, the system will evolve toward complete randomness. It will exhaust itself of any capability. Thermodynamic equilibrium is this state of maximum randomness. Initially empirical, the law now has concrete statistical foundations, and it is the reason why natural processes tend to go in one direction, as well as for the arrow of time. In contrast, our world, natural and what we have created, has a lot of organization together with a capability to do really interesting things. Atoms assemble themselves into molecules. Molecules become complex. Very complex molecular machines such as the ribosome, consisting of RNA and protein parts, become controllers that translate messenger RNA into the proteins the cell needs. Cells appear (thousands in variety in the human), organs form, and growth ends up in the diversity of nature’s creations. A living emergent machine appeared through a hierarchy of this organized coupling. Our social, computing, governmental, and financial systems are also such machinery: parts coupling to other parts under an organized orthodoxy that has numerous capabilities. The second law’s vector toward thermodynamic randomness has been overcome through the flow of matter and energy, and an interesting diversity has come about.
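
An isolated toy system shows the statistical content of the law. The sketch uses the Ehrenfest urn model, our choice of illustration rather than anything in the text: N particles split between the two halves of a box, one randomly chosen particle switches sides per step, and entropy is computed as S/kB = ln W, where W counts the arrangements with a given number of particles on the left. Starting from the fully ordered state, entropy climbs toward its maximum and then fluctuates close to it.

```python
import math
import random

def boltzmann_entropy(n_total, n_left):
    """S / k_B = ln W, with W the number of ways to choose which particles are on the left."""
    return math.log(math.comb(n_total, n_left))

def ehrenfest(n_total, steps, seed=0):
    """Ehrenfest urn model: each step, one randomly chosen particle switches sides.

    Starts from the fully ordered state (all particles on the left) and returns
    the entropy after every step.
    """
    rng = random.Random(seed)
    n_left = n_total
    history = [boltzmann_entropy(n_total, n_left)]
    for _ in range(steps):
        # The chosen particle is on the left with probability n_left / n_total.
        if rng.random() < n_left / n_total:
            n_left -= 1
        else:
            n_left += 1
        history.append(boltzmann_entropy(n_total, n_left))
    return history

N = 100
s = ehrenfest(N, steps=2000, seed=1)
s_max = boltzmann_entropy(N, N // 2)
print(f"start {s[0]:.2f}, end {s[-1]:.2f}, maximum possible {s_max:.2f}")
```

Nothing in the rule favors disorder; there are simply overwhelmingly more disordered arrangements than ordered ones, which is why the system drifts toward them and stays near them.
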

This is the appearance of complexity taking the form of hierarchy, for which Herbert Simon has an interesting parable in the essay “The architecture of complexity.”³ It involves Hora and Tempus, two watchmakers. Both make watches with a thousand little individual parts. Tempus made his watches by putting all the parts together in one go, but if he was interrupted, for example by the phone, the unfinished watch fell apart and he had to reassemble it from all its thousand parts. The more the customers liked the watch, the more they called, and the more Tempus fell behind. Hora’s watches were just as good, but he made them using a hierarchy of subassemblies. The first group of subassemblies used ten parts each. Then ten such subassemblies were used to build a bigger subassembly, and so on. Both were proud, well-regarded craftsmen in the beginning, but Hora prospered while Tempus lost his shop. If there is a one-in-a-hundred chance of being interrupted each time a part is added, Tempus, on average, had to spend about four thousand times as long as Hora to assemble a watch. He had to start all over again from the beginning; Hora had to redo only part of one subassembly. Hierarchy made Hora’s success at this rudimentary complex system possible.
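
Simon’s arithmetic can be sketched under one simple bookkeeping assumption of ours: every part placement risks an interruption with probability 0.01, and an interruption scraps only the unit currently being assembled (the whole watch for Tempus, a ten-part subassembly for Hora). Under this model the ratio comes out near two thousand rather than four thousand; that is the same order of magnitude as Simon’s figure, and the exact factor depends on how the lost work is counted.

```python
def expected_steps(n_parts, p_interrupt):
    """Expected number of part placements needed to finish one n-part unit.

    Each placement is interrupted with probability p_interrupt, and an
    interruption scraps the unfinished unit, so the worker needs n successes
    in a row. This is the textbook expected waiting time for a run of n
    successes: (q**-n - 1) / (1 - q), with q = 1 - p_interrupt.
    """
    q = 1.0 - p_interrupt
    return (q ** -n_parts - 1.0) / p_interrupt

P = 0.01  # one-in-a-hundred chance of interruption per part, as in the parable

# Tempus: a single flat assembly of 1,000 parts.
tempus = expected_steps(1000, P)

# Hora: 100 first-level, 10 second-level, and 1 final assembly, each of 10 units.
hora = (100 + 10 + 1) * expected_steps(10, P)

print(f"Tempus ~{tempus:,.0f} placements, Hora ~{hora:,.0f}, ratio ~{tempus / hora:,.0f}")
```

The decisive term is q**-n: it grows exponentially with the size of the unit that must be finished without interruption, so keeping every unit small (ten parts) keeps the penalty for each interruption small.
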

Thermodynamics’ lesson in this parable, as also in a generalization to our physical and natural world, is that the complexity arose from the assembling of parts which in general may be random. The likelihood of building up from a small number of elements coming together to obtain a stable assembly, initially simple, but progressively more complex with the building up of hierarchy, is larger than for the coming together of a large number of elements. And new properties emerged in the end watch and possibly in the intermediate subassemblies. Of course, this is a very simplistic description subject to many objections, but an organizational structure appeared due to the flow of energy and parts into the system and the existence of stable intermediate forms that had a lowering of entropy. If there is enough time, nature too will build hierarchies based on stable intermediate forms that it discovers. By doing so, it acquires negentropy (a lowering—negative—of entropy from its state of exhaustion, that is, the maximum). This is the story of the appearance of life.

In his 1967 book The Ghost in the Machine⁴ (the third time that this phrase has appeared for us), Arthur Koestler calls this process the “Janus effect.” Nodes of this hierarchy are like the two-faced Roman god Janus: one face looks toward the dependent parts below, the other toward the apex. These links, and their proliferation with their unusual properties, are crucial to the emergent properties of the whole.

Thermodynamics, however, places additional constraints, and these arise in the confluence of energy, entropy, and errors. Any complex system consists of a large number of hierarchical subassemblies. A system using nanoscale objects is subject to these constraints of the building process. If errors are to be reduced, the reduction of entropy must be large. But such a reduction requires the use of more energy, and heat arises in the process. To be sustainable (avoiding overheating even as energy flow keeps the system working) requires high energy efficiency, limits on the amount of energy transformed, and keeping a lid on errors. Having to deploy so much energy at each step makes the system enormously energy hungry. The computation and communication disciplines suffer from this thermodynamic consequence. The giant turbines that convert energy to electric form, the mechanical motion of the blades to the flow of current across voltages, need to be incredibly efficient, so that only a few percent, if that, of the energy is lost as heat. And a turbine is not really that complex a system. Computation and communication have not yet learned this lesson.

Nature has found its own clever way around this problem. Consider the complex biological machine that is the human. The building or replacement processes occur individually in very small, nanoscale volumes and happen in parallel at the scale of Avogadro’s number. All this transcription, messaging, protein and cell generation, and so on requires energy, and nature works around the error problem by introducing mechanisms for self-repair. Very low energy (10–100 kBT, kBT being a good measure of the order of energy in thermal motion) breaks and forms these bonds. The error probability falls exponentially with this energy, roughly as exp(−E/kBT). At 10 kBT, about one in 20,000 building steps, for example each unzipping and copying step, will have an error, so errors must be detected, and the building machine must step back to the previous known good state and rebuild that bond. It is a bit like that phone call to Tempus and Hora, but one that forces a restart only from an intermediate state, as for Hora. Nature manages to do this pretty well. The human body recycles its own body weight of ATP (adenosine triphosphate), the molecule for energy transformation, every day so that chemical synthesis, nerve impulse propagation, and muscle contraction can happen. Checking and repairing made this complexity under energy constraint possible.
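
The exponential dependence of errors on energy can be sketched with a Boltzmann-factor estimate, P_err ≈ exp(−E/kBT). This estimate is an assumption we introduce for illustration, as is the hypothetical check-and-repair stage with its `miss_rate`; the point is only to show how one checking pass lowers the residual error of a low-energy step. Under this estimate, 10 kBT corresponds to roughly one error in twenty thousand steps.

```python
import math

def error_probability(energy_kt):
    """Boltzmann-factor estimate of a per-step error, with energy in units of k_B*T."""
    return math.exp(-energy_kt)

def residual_error(energy_kt, miss_rate):
    """Error left after one check-and-repair pass that catches a fraction (1 - miss_rate)."""
    return error_probability(energy_kt) * miss_rate

for e in (10, 20, 50, 100):
    p = error_probability(e)
    print(f"{e:>3} kT: about 1 error in {1 / p:.3g} steps")

# Hypothetical proofreading stage that itself misses 1 error in 1,000.
print(f"10 kT with checking: about 1 error in {1 / residual_error(10, 1e-3):.3g} steps")
```

Raising the bond energy buys accuracy exponentially but costs energy on every step; a checking stage buys a comparable gain while leaving each step at low energy, which is the trade nature appears to make.
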

How is all this discussion related to the subject of nanotechnology with its masquerading ghost?

I would like to illustrate this by bringing together two technologies of this decade: that of CRISPR and the rise of artificial intelligence.

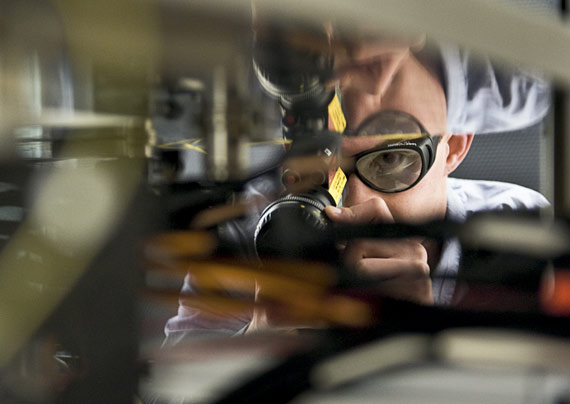

CRISPR is a gene-editing method that uses a protein (Cas9, a naturally occurring enzyme) and specific guide RNAs to disrupt host genes and to insert sequences of interest. A guide RNA that is complementary to a target DNA sequence allows Cas9 to unwind that sequence’s DNA helix and create a double-stranded break; the cell’s repair machinery then puts the strands back together and, when a template is supplied, can incorporate a desired experimental DNA sequence. The guide RNA sequence is relatively inexpensive to design, efficiency is high, and the protein can be injected. Multiple genes can be mutated in one step. The process can be practiced in a cell’s nucleus, in stem cells, in embryos, and extracellularly. It is a gene-editing tool that allows the engineering of the genome. Thermodynamics teaches us that errors will always occur; if one puts in a lot more energy, they are less likely, and this is why low-energy systems need self-correction mechanisms. Even then, rare errors can still slip through. CRISPR helps with that for genomic errors in the natural world. A defective Janus, an error in the Hora-like way of building the system, can be fixed.
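
The targeting step can be made concrete with a toy sequence scan. It assumes the widely used Streptococcus pyogenes Cas9, which requires an NGG protospacer-adjacent motif (PAM) immediately after the target; the absence of a PAM next to the spacers in the bacterium’s own CRISPR array is part of how Cas9 avoids cutting its host. The function name, the single-strand search, and the exact-match rule are simplifications of ours: real guides also bind sites with a few mismatches, which is one source of off-target edits.

```python
def find_cas9_sites(dna, spacer):
    """Toy scan of ONE strand for SpCas9 target sites.

    A site is an exact match to the spacer followed immediately by an NGG PAM.
    Returns (start, cut) pairs: start is the 0-based index of the matched
    protospacer, cut is the index of the first base after the predicted blunt
    cut, which SpCas9 makes 3 bp upstream of the PAM.
    """
    dna, spacer = dna.upper(), spacer.upper()
    k = len(spacer)
    sites = []
    # Stop early enough that the 3-letter PAM still fits inside the sequence.
    for start in range(len(dna) - k - 2):
        protospacer = dna[start:start + k]
        pam = dna[start + k:start + k + 3]
        if protospacer == spacer and pam[1:] == "GG":  # "N" in NGG matches any base
            sites.append((start, start + k - 3))
    return sites

# Hypothetical 20-nt spacer written in DNA letters (the guide RNA carries U for T).
example_spacer = "GACGCATAAAGATGAGACGC"
example_dna = "TTT" + example_spacer + "TGG" + "AAAA"
print(find_cas9_sites(example_dna, example_spacer))
```

Replacing the trailing "TGG" with a sequence lacking "GG" makes the same perfect match invisible to the scan, mirroring how the PAM requirement gates cutting.
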

Drug discovery, genetic disease prevention, treatments for heart diseases and blood conditions, modifying plants⁵ for a change of properties, and others all become possible. Jointless tomatoes are a working example: the joint is where the tomato’s stem attaches to the plant, and it gets a signal to die and let go when the tomato is ripe. What would take nature’s complex system many, many generations, such as through plant breeding or in living species, can be achieved in one or a few generations. An emergent-evolution machine has been born with human intervention. But now it can live on its own. New members of the natural kingdom can be engineered.

The second technology is machine learning, now evolving toward artificial intelligence. As electronic devices have become smaller and smaller, and new architectures, including those with rudimentary self-test and self-repair, have evolved with ever-higher densities and integration levels, we now have supercomputing resources locally at our desks as well as in the cloud. This enormous data sorting and manipulating power is now sufficient for programmed algorithms to discover patterns, find connections between them, and also launch other tasks that ask questions to help test the validity of inferences and probe for more data where not enough is known. The human view of information is of it representing compact associations. While the picture of a dog contains a lot of data in its pixels, and one can even associate a scientific information measure (a negentropy of sorts) with it, the information in a human context consists of the associations made when viewing the picture: that it is a dog, more specifically a golden retriever that appears well cared for, but also that golden retrievers are prone to cancer due to selective breeding, and so on. Quite a bit of this information can be associated with the dog as nuggets of wisdom, even that dogs and cats do not always get along, or that dogs became domesticated (this too by selective breeding) during the hunter-gatherer phase of human history. As we go farther and farther away from the dog as a pattern (dogs evolved from wolves), a physical machine’s ability gets weaker and weaker unless very different domains of information can be connected as knowledge. For a human this is less of a problem. If the fact that dogs descend from wolves already exists as content in the machine, that is fine, but if one wants the machine to find this connection, it will require asking questions, genetic tracing, pattern matching, and taking other steps before the connection is established.
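
The contrast between stored and discovered connections can be illustrated with a toy association graph. The nodes and links below are hypothetical, echoing the paragraph’s examples, and breadth-first search is our choice of method: it finds a chain of associations only when every link in the chain has already been stored.

```python
from collections import deque

# Hypothetical stored associations (undirected), echoing the paragraph's examples.
ASSOCIATIONS = {
    "picture": ["dog"],
    "dog": ["picture", "golden retriever", "cat", "domestication"],
    "golden retriever": ["dog", "selective breeding", "cancer risk"],
    "selective breeding": ["golden retriever", "domestication"],
    "domestication": ["dog", "selective breeding", "hunter-gatherers"],
    "cat": ["dog"],
    "cancer risk": ["golden retriever"],
    "hunter-gatherers": ["domestication"],
    "wolf": [],  # known as a concept, but with no stored link to "dog"
}

def association_path(graph, start, goal):
    """Breadth-first search for the shortest chain of stored associations, or None."""
    queue = deque([[start]])
    seen = {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph.get(path[-1], []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None

print(association_path(ASSOCIATIONS, "picture", "hunter-gatherers"))
print(association_path(ASSOCIATIONS, "picture", "wolf"))
```

Adding a single stored link between "dog" and "wolf" makes the second query succeed; discovering that link in the first place is the hard part the paragraph describes.
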

Human learning and knowledge creation happen through hypotheses, experiments, and debates before a consensus ultimately appears. Because machines can ask questions and search for information, this capability is now within the reach of artificial intelligence, although connecting two previously separate domains, as space and time were connected in relativity, is certainly not, at least not yet. So, when one reads reports of artificial intelligence performing a preliminary assessment of diseases, helping design molecules, and building experiments for the designer molecules, these are fairly complex pattern-matching tasks in which data and information are being connected.

With quantum computing also making enormous progress, there is another range of possibilities of powerful import. Because of the exceptional and different computing capability that quantum computation achieves (entanglement is subsumed), connections that exist but are not apparent classically, being latent quantum-mechanically, could potentially be made. This implies that the kind of associations and connections that a human sees, as Einstein saw in relativity, and that classical machine learning sees only if it has already seen them, may be unraveled by quantum computing machinery, since they exist in the entangled data but are not classically manifest.

We can now connect our thermodynamic thoughts to this discussion.

CRISPR as a gene-modification tool came about by replacing nature’s randomized-trials process with human intervention, because scientists knew how the bacterial immune response fights foreign viral DNA. In bacteria, CRISPR produces two short RNA strands (together, the guide RNA), which form a complex with the Cas9 enzyme that then targets, cuts, and thus disables the viral DNA. Because of the way Cas9 binds to DNA, it can distinguish between bacterial DNA and viral DNA. In this ability lies the memory that can continue to correct future threats. The threats are the errors. From the domain of bacterial viral infection, we have now made connections to the vast domain of natural processes that depend on the genome, because a process that nature had shown to be stable has now been connected to other places, which nature may have discovered but perhaps not evolved to in the Wallace–Darwin sense. Both the energy constraint and the error-correction and stability constraint of thermodynamics were satisfied in the physical practice of CRISPR in the natural world. Human beings can now apply it in multitudes of places where nature does not, either because it has not figured it out or because it figured out that it was inappropriate.

Now look at the evolution of computing toward artificial intelligence in our physical technology, with its capabilities as well as its shortcomings. As physical electronic devices shrink to smaller length scales, much that happens at the quantum scale becomes important. Tunneling is one such phenomenon: electrons that tunnel because voltages exist, not because data is being manipulated, only produce heat and do nothing useful. Getting low errors in these physical systems, without yet a general ability to correct errors at the individual-step stage, means that one cannot reduce the energy employed to manipulate the data. So, the energy that a biological complex system consumes to make a useful transformation is many orders of magnitude lower than that of the physical complex system. The human brain is like a 20 W bulb in its energy consumption. The physical systems used in the artificial intelligence world consume hundreds of times more power if sitting on a desktop, and another factor of hundreds more if resident in a data center. So, this artificial intelligence capability has arrived with an enormous increase in power consumption because of the thermodynamics of the way the complex system is implemented.
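
The power comparison is simple arithmetic. Only the 20 W figure comes from the text; the two multipliers are assumptions taken at the low end of its “hundreds of times” (100×) and “another factor of hundreds” (a further 100×).

```python
BRAIN_W = 20.0            # from the text: the brain as a ~20 W bulb
DESKTOP_FACTOR = 100      # assumed low end of "hundreds of times"
DATACENTER_FACTOR = 100   # assumed low end of "another factor of hundreds"

def daily_kwh(watts):
    """Energy used in 24 hours of continuous operation, in kilowatt-hours."""
    return watts * 24 / 1000

systems = {
    "brain": BRAIN_W,
    "desktop system": BRAIN_W * DESKTOP_FACTOR,
    "data-center system": BRAIN_W * DESKTOP_FACTOR * DATACENTER_FACTOR,
}
for name, watts in systems.items():
    print(f"{name:>18}: {watts:>9,.0f} W, {daily_kwh(watts):>8,.1f} kWh per day")
```

Even at these conservative multipliers, the data-center case draws ten thousand times the brain’s power.
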

Human thinking and the human brain are a frontier whose little pieces we may understand, but not the complex assembly that makes it such a marvel. So, the tale of “Blind men and the elephant” should keep serving us as a caution. But there are a number of characteristics on which scientists (psychologists, behaviorists, neuroscientists) do seem to agree through their multifaceted experiments. The behaviorist Daniel Kahneman6 classifies human thinking as fast and slow. Fast, or System 1, thinking is heuristic, instinctive, and emotional. In making quick judgments, one maps other contexts onto the problem one is faced with. This dependence on heuristics causes bias, and therefore systematic errors. Slow, or System 2, thinking is deliberative and analytic, hence slow. The human brain, like those of only a few other mammals, is capable of imagining various past and future “worlds” to develop situational inferences and responses. The neuroscientist Robert Sapolsky7 discusses the origin of aggression and gratification in terms of events in the brain. I associate these mostly with fast thinking, since they tend to be instinctive. Functionally, the brain can be partitioned into three parts. Layer 1, common to the natural kingdom, is the largely autonomic inner core that keeps the body on an even keel through regulation. Layer 2, more recent in evolution, expanded in mammals and is proportionally the most advanced in humans. Layer 3, the neocortex or upper surface, is the youngest in development (a few hundred million years8), relatively very recent, with cognition, abstraction, contemplation, and sensory processing as its fortes. Layer 1 also works under orders from Layer 2 through the hypothalamus. Layer 3 can send signals to Layer 2 to then direct Layer 1. The amygdala is a limbic structure, an interlayer, below the cortex. Aggression is mediated by the amygdala, the frontal cortex, and the cortical dopamine system.

The dopaminergic system, which generates dopamine in various parts of the brain, is activated in anticipation of reward, so in this particular aspect the complex system is trainable (a Pavlovian example): learning can be introduced through reinforcing and inhibiting training experiences. A profound example of the failure of this machinery was Ulrike Meinhof, of the Baader-Meinhof Red Army Faction, which was formed in 1968 in Germany. Earlier, as a journalist, she had had a brain tumor removed in 1962. Her autopsy in 1976 showed a tumor and surgical scar tissue impinging on the amygdala, where social contexts, anxieties, ambiguities, and so on are processed to form the response of aggression. Many human proclivities depend on this Layer 3 to Layer 2 machinery and the Januses embedded in it. Pernicious errors, if not corrected, matter profoundly, as does the style of thinking and decision-making in intelligence. This is true for nature and will be true for artificial intelligence.

With this background on the current state of affairs, we can now turn to the future. Technology, when used judiciously and for the greater good of the societal collective, has been a unique human contribution to nature. Technological capabilities over the last nearly two hundred years, since the invention of the steam engine, have been compounding at an incredible rate.

In this milieu, in recent decades, nanotechnology has nearly eliminated the gulf between the physical and the biological domains. The last decades of the twentieth century and those that followed brought about numerous nanotechnology tools that made a more fundamental understanding of complex biological processes possible. In turn, this has led to the remarkable capabilities for manipulation that now exist and that sidestep the natural ways of evolution. Artificial intelligence brings in brain-like cognitive capability, except that this capability can access vastly more data than any human can retain, and it is often in the hands of corporations.

The story of technology tells us that the nineteenth-century Industrial Revolution occurred in mechanics, which served to automate physical labor. I am going to call this new nanotechnology-enabled change, with its merging of the biological and the physical, an existential revolution, since it impinges directly on us as beings.

This existential revolution, and the changes and disruption it is likely to cause, are of such a kind and at such a scale that the traditional long delay before society exercises control (it takes many decades, even societal upheavals such as those arising in Marxism following the Industrial Revolution) will just not work. Much care, foresight, and judgment are needed; otherwise we will witness consequences far worse than the loss of species that we are currently witnessing due to the unbridled expansion of humanity and its need for resources.

There are plenty of illustrations pointing toward this, some more deeply connected than others.

Between 1965 and 2015, the planet’s primary energy supply increased from 40 PWh to 135 PWh (petawatt-hours) per year. This is an increase of more than a factor of three over the fifty-year period, with oil, coal, and gas as the predominant sources of energy. During the same period, the yearly electrical energy movement in the US alone went from about 8 PWh to nearly 42 PWh, a five-fold increase. The United States alone now consumes, in the form of electric energy, the total energy that the entire world consumed about fifty years ago. US consumption is a quarter of the world’s energy consumption even though its population is less than one twentieth of the world’s. Much of this increase in energy consumption has come from the proliferation of computation and communication. Each smartphone’s functioning requires a semi-refrigerator of network and switching apparatus. Each web search, or each advertisement streamed courtesy of machine-learning algorithms, is another semi-refrigerator of computational resources. Global warming is at its heart a problem arising from this electricity consumption, which in turn comes from this information edifice and its thermodynamic origins.
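The growth figures in this paragraph can be checked, and turned into an implied yearly rate, with a few lines of Python. The sketch uses only the numbers quoted above; the constant and function names are hypothetical, and the smooth compound rate is an illustrative assumption, since actual yearly growth need not have been constant.

```python
# Growth arithmetic for the energy figures quoted above (values in PWh per year).
WORLD_1965, WORLD_2015 = 40.0, 135.0    # world primary energy supply
US_ELEC_1965, US_ELEC_2015 = 8.0, 42.0  # US yearly electrical energy, as quoted
YEARS = 2015 - 1965


def growth_factor(start: float, end: float) -> float:
    """Ratio of final to initial value."""
    return end / start


def annual_growth_rate(start: float, end: float, years: int) -> float:
    """Constant yearly rate r such that start * (1 + r) ** years == end."""
    return (end / start) ** (1.0 / years) - 1.0


world_factor = growth_factor(WORLD_1965, WORLD_2015)
us_factor = growth_factor(US_ELEC_1965, US_ELEC_2015)
world_rate = annual_growth_rate(WORLD_1965, WORLD_2015, YEARS)
us_rate = annual_growth_rate(US_ELEC_1965, US_ELEC_2015, YEARS)

print(f"World primary energy: x{world_factor:.2f}, about {world_rate:.1%} per year")
print(f"US electrical energy: x{us_factor:.2f}, about {us_rate:.1%} per year")
# Compare US electrical energy today with the whole world's energy in 1965.
print(f"US electrical (2015) / world total (1965): {US_ELEC_2015 / WORLD_1965:.2f}")
```

On these numbers the world figure grows by a factor of 3.375 (roughly 2.5% per year, compounded) and the US electrical figure by a factor of 5.25 (roughly 3.4% per year); 42 PWh against 40 PWh is the comparison behind the claim that US electric consumption now matches the whole world’s energy consumption of fifty years ago.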

As the cognitive aspect of machine ability improves, and robotics proliferates (autonomous cars being a primary trend currently), will people live farther and farther away from work because cars have been transformed into offices on wheels? Or will it lead to an efficient usage of cars, where cars pick people up and drop them off at set places, so that fewer cars are needed because they are being used efficiently, perhaps existing only as a service owned by the community or a business? If it is the latter, this is a technological solution to a major problem that has appeared in our society more than a hundred years after the invention of the combustion engine. If it is the former, there is now another increase in energy consumption brought about by technology. Existentially insidious is the continuing expansion of “the medium is the message,” because technology companies with the computational platforms and the reach are really media companies pushing advertising, thoughts, and lifestyles by leveraging their access to the individual’s societal interaction stream.

In the biological disruption, humans have not really objected to genetically modified agriculture, which is a rudimentary genome modification. Improved nutritional value, pest and stress resistance, and other properties have been welcomed, particularly in countries such as the US and in the Third World. Less well understood are the crossbreeding and introduction of foreign transgenes into nature, and the effect that such artificial constructs, which did not arise through nature’s evolutionary process, have on other natural species. Increased resistance also goes together with invasiveness, leading to reduced diversity. Bt-corn fertilizes other crops and becomes a vector for cross-contamination of genes in the Mendelian style. Bt-corn also affects other insects, a prominent example being the monarch butterfly. So, just as we now face antibiotic-resistant bacteria, especially in the hospitals of the Third World, with tuberculosis being the most insidious, and in the major hospitals of the US, we will face deleterious consequences from the new engineering of biology. We have few capabilities for visualizing these possible threats, so this subject is worth dwelling on.

What does the introduction of a precisely programmed artificial change into the natural world do? The natural world is one in which evolution takes place via selection on small, inherited variations that increase the ability to compete, survive, and reproduce, through natural random interactions of which only specific ones will continue. The artificial change will lead to a further continuation of the natural evolution process, but one into which a nonnatural entity has now been introduced. Nature evolves slowly, on a long-term time scale. This evolution minimizes harmful characteristics all the way from the basic molecular level to the complex system level, at each step of the assembly. While the artificial change has a specific characteristic desired by humans, it may not, and in many instances will not, have the characteristics that nature would have chosen because of the optimization inherent in the natural evolution process. The compression of the time scale and the introduction of specific nonnatural programming mean that the chances of errors will be large. We see the deleterious consequences of such errors all the time in our world’s complex systems. An error of placing the wrong person in the hierarchy of an organization leads to the failure of that organization. An error of placing the wrong part in a complex system, such as Hora’s watch, will stop it from working. A specific function may be achieved through the deliberate making of a choice, but a global function may be lost.

CRISPR makes the consequences of this genetic modification of the natural world much more profound, because it makes programmed change over multiple genes possible, not just in crops but in all the different inhabitants, living and nonliving. Multiple changes effected simultaneously compound the risks. A hamburger-sized tomato that has flavor, takes long to rot, and grows on a jointless plant may be very desirable to McDonald’s, the farmer, and the consumer, but it is extremely risky, and the risks of what the jumping of genes across species could lead to are not even really ascertainable. This is precisely what the thermodynamic constraints on nature’s processes (energies, error rates, error correction, generational mutations, and self-selection) have mitigated for billions of years.

A short step from this is the potential for programming the traits of our own offspring. Who does not want brighter and more beautiful children? A hundred years ago, eugenics was enormously popular in the countries of the West (John Maynard Keynes, Teddy Roosevelt, Bertrand Russell, Bernard Shaw, and Winston Churchill were all adherents), and it did not take long for this path to culminate in Adolf Hitler. Among the pockets of high incidence of Asperger’s syndrome is Silicon Valley, where many high-technology practitioners, a group with specific and similar traits and thus reduced diversity, reside. That autism exists as a problem here should not be a cause for surprise. Genes serve multitudes of purposes, and various traits arise in their coexistence. This requires the diversity from which humans acquire their multitudes of traits, of disease, disposition, and others. Program this, and we really cannot tell what deleterious outcomes will arise, nor will we see them for generations.

Another scaling of this approach is the pairing of CRISPR with artificial intelligence as an emergent-evolution fusion. With enough data, one could ask for a certain set of characteristics in a group, the algorithms would design CRISPR experiments to achieve it, and behold, we have engineered a new human, or even a new species.

As Leo Rosten once said: “When you don’t know where a road leads, it sure as hell will take you there.” This is the existential paradox.

So, I return to the dilemma outlined at the beginning of this paper. In the first half of the twentieth century, we learned to create chemicals for specific purposes with similar motives, pesticides for example; even as agricultural output increased, a desirable outcome, we also faced Rachel Carson’s Silent Spring.9 With time, we found ways to mitigate the problem, but it still festers at low intensity.

It is the challenge of society to bring a civilized order to all this. Society needs to find ways to function so that self-repair becomes possible at each stage of the complex system building. Neither top-down nor bottom-up suffices; repair must exist at each stage, in how society functions and also in how the technology works.

The complex world that we now have carries this existential dilemma within the emergent-evolutionary fusion.

We have moved beyond Descartes and Ryle, and the great phenomenologists.

The ghost exists in this machinery. Ghosts can be angelic and ghosts can be demonic. Angels will benefit us tremendously, just as transportation has for mobility, drugs have for health, communication has for our friendships and family, and computing has in helping build the marvels and technologies that we employ every day. Humans must understand and shape the technology. If we can build such systems with a clear and well-thought-out understanding of what is not acceptable as a human effort, keep notions of relevance and provenance, and make the systems reliable, then the world’s education, health, agriculture, finance, and all the other domains that are essential to being civilized humans as citizens of this planet can all be integrated together for the greater good of all.

But if this is not dealt with judiciously and at planetary scale, with nature’s kingdom at its heart, the human story could very well follow the memorable line from Arthur Clarke’s book 2010: Odyssey Two: “The phantom was gone; only the motes of dancing dust were left, resuming their random patterns in the air.”
