A little-known mathematical formula is now used in AI systems to help with a wide range of problems, from predicting how the pandemic is spreading to everyday applications on our smartphones. This article explains why.

When I first started working in AI about 35 years ago, I was intellectually dazzled by the application of one technique. It was called Bayesian Inference, and it was based upon a mathematical formula conceived by a clergyman named Thomas Bayes in the 18th Century. The formula became known as Bayes Theorem. It was being used very effectively in expert systems, a successful branch of AI in the 1980s. What struck me about this technique was the way that a mathematical formula could mimic, and in many cases improve upon, human experts in their decision-making processes.

As a graduate mathematician, I was familiar with Bayes Theorem and had used it in other types of problem-solving. But its use in emulating human experts was new to me. I found it impressive because human experts would be unlikely to know the mathematics underlying Bayes Theorem. Today, it’s ubiquitous in the world of AI. We all use it on our smartphones without realizing it. In machine learning systems today, Bayesian inference is more prominent than ever.

Why? The answer is that, given the right conditions, Bayesian Inference models human expertise exceptionally well. How could that be? Experts subconsciously learn to assign reasonably correct weightings to evidence, which enables them to do intuitive calculations in problem-solving. Bayes’ formula can do such calculations precisely.

Bayes Theorem – how it works

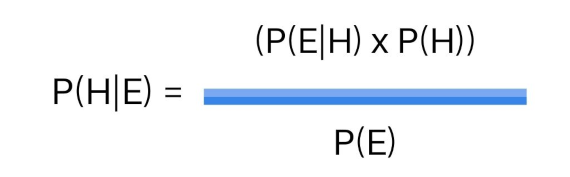

To understand how it works, we use symbolic notation to describe the problem in Bayes as follows:

First, the problem should be formulated in terms of one or more hypotheses. A hypothesis is simply a proposition whose truth is unknown. For example, a hypothesis could be that a patient has Covid. If a person is selected at random, then whether this is true would be unknown. But experts would have an initial idea of how likely it is to be true: we call this the prior probability, and it is the measure of the likelihood of an event happening without any further information being known. A probability represents the chance of an event happening as a numerical value between 0 and 1. A good estimate of a prior probability can be obtained from an expert or by estimating its value from statistical averages. In the case of Covid, we could compare the number of infections to the size of the population. We write P(H) to represent the prior probability of H.

The next step is to gather evidence that will be used to update our prior probability of H. We normally refer to an item of evidence as E, and write P(E) for the probability of observing it. Bayes realized that by combining the prior probability of a hypothesis with the evidence from current observations, he could calculate an updated probability reflecting the current situation, known as the posterior probability and written P(H/E). Once we have the prior probability for a hypothesis, we need to describe how strongly an item of evidence is tied to that hypothesis. We write P(E/H) to mean the probability of the evidence E being true if the hypothesis H is true; that is, the probability of E conditional on H. In essence, Bayes conceived a formula for updating the probability of a hypothesis when new evidence is received. If the new evidence is consistent with the hypothesis, then the probability of the hypothesis increases; otherwise, it may decrease.

The Bayes formula, written in mathematical notation, is

P(H/E) = P(E/H) x P(H) / P(E)

To use this formula, we would get values for the right-hand side and plug them into the formula to find an updated value of P(H/E). In other words, we find an updated value of the chance of the hypothesis being true given that we have observed the evidence E. In practice, all the number crunching is done by computer. However, an example is shown below to illustrate how Bayesian inference could be used to update predictions for Covid infections based upon the outcome of a Covid test. This is a simplification and is not based on real data. Its purpose is to show how mathematics is used in the formula to update probabilities.
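As a minimal sketch of that plug-in step, the Python function below evaluates P(H/E) = P(E/H) x P(H) / P(E) directly. The function name `bayes_posterior` and the numbers in the usage lines are illustrative assumptions, not taken from any real dataset.

```python
def bayes_posterior(prior_h, p_e_given_h, p_e):
    """Return P(H/E) = P(E/H) x P(H) / P(E).

    prior_h      -- P(H), the prior probability of the hypothesis
    p_e_given_h  -- P(E/H), the probability of the evidence if H is true
    p_e          -- P(E), the overall probability of the evidence
    """
    if not 0.0 < p_e <= 1.0:
        raise ValueError("P(E) must be greater than 0 and at most 1")
    return p_e_given_h * prior_h / p_e


# Hypothetical values chosen only to show the mechanics of the update.
posterior = bayes_posterior(prior_h=0.2, p_e_given_h=0.9, p_e=0.3)
print(f"P(H/E) = {posterior:.2f}")
```

Here the evidence is three times more likely when the hypothesis is true than it is overall, so the probability of the hypothesis rises from the prior of 0.2.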

Example

Suppose it is known in a certain hospital that there is a 1 in 1000 chance that a patient has Covid. Suppose it is also known that a test is used that is 99% accurate. What is the probability that a patient who tests positive has Covid?

To find the solution, we need to find values for each part of the right-hand side. i.e., P(H), P(E/H), and P(E).

P(H) = 1/1000 = 0.001 (since there is a 0.1% chance of having Covid unconditionally).

P(E/H) = 0.99. This is given, since 99% of the time Covid patients will test positive.

Now P(E) = P(E/H) x P(H) + P(E/~H) x P(~H), where ~H means not having Covid.

So, P(~H) = 999/1000 = 0.999.

And, P(E/~H) = 0.01

Hence, P(H/E) = (0.99 x 0.001) / (0.99 x 0.001 + 0.01 x 0.999) = 0.00099 / 0.01098 ≈ 0.09.

This means that the patient would have a 9% chance of having Covid given that he or she tested positive. This may come as a surprise to some people, but remember there is a 1/1000 chance of having Covid, so in a sample of 1000, we would expect 1 to have it. Of the remaining 999 people who don’t have it, the test will give a wrong result about once in a hundred, so we would expect about 10 of them to test positive. We are left with 1 positive test with Covid and about 10 without, giving a probability of about 1 in 11, which is very close to the Bayes formula result of 0.09.
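The arithmetic of this example can be checked with a short Python sketch. It computes P(E) from P(E/H) x P(H) + P(E/~H) x P(~H) and then applies the Bayes formula, using the example's own figures. The function name `posterior_from_test` and the choice of a 100,000-person sample for the counting check are assumptions made for illustration.

```python
def posterior_from_test(prior, p_pos_given_h, p_pos_given_not_h):
    """Return P(H/E) for a positive test result E."""
    p_not_h = 1.0 - prior                                      # P(~H)
    p_e = p_pos_given_h * prior + p_pos_given_not_h * p_not_h  # P(E)
    return p_pos_given_h * prior / p_e                         # Bayes formula


# Figures from the example: P(H) = 0.001, P(E/H) = 0.99, P(E/~H) = 0.01.
p_covid_given_positive = posterior_from_test(0.001, 0.99, 0.01)
print(f"P(H/E) = {p_covid_given_positive:.4f}")

# Counting check on a hypothetical sample of 100,000 patients.
sample = 100_000
with_covid = sample * 0.001                      # patients who have Covid
true_positives = with_covid * 0.99               # of those, positive tests
false_positives = (sample - with_covid) * 0.01   # healthy but positive
by_counting = true_positives / (true_positives + false_positives)
print(f"By counting: {by_counting:.4f}")
```

Both routes give the same answer because the counting argument is simply the formula multiplied through by the sample size.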

Bayesian Controversy

The use of Bayesian inference has also caused controversy at times, particularly in forensic science. For example, a man was convicted of rape in the UK in 1990 and sentenced to 16 years, partly on the basis of DNA evidence. An expert witness for the prosecution said that the chance that the DNA could be the same as another person’s was just one in 3 million.

But the man appealed against this sentence. An expert claimed that there was a flaw in the reasoning because this evidence was mixing up two questions: first, how likely would it be that a person’s DNA matched the DNA in the sample, given that they were innocent; and second, how likely would they be to be innocent, if their DNA matched that of the sample? Although there was only a 1 in 3 million chance of matching the DNA, the total population was assumed to be about 60 million. This means that 20 people would be expected to have matching DNA, and one of those would be guilty. Hence, if one of them is selected at random, there is a 19 out of 20 chance (95%) that an innocent person is selected. This does seem very high. Confusing the first question with the second has become known as the “prosecutor’s fallacy”. However, DNA evidence alone would not be enough to secure a conviction; other evidence would be needed to reduce this probability. For example, if it was known that none of the other 19 people could have been in that area at the time the victim was raped, then applying that evidence to Bayes would increase the likelihood of the accused being guilty. Bayesian inference is a probability updating process, and each new piece of evidence can strengthen or weaken the likelihood of guilt.
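The two questions can be separated numerically with a small Python sketch. The first part follows the counting argument above with the figures quoted in the text. The second part shows one further update; its two conditional probabilities (0.9 and 0.1) are invented purely for illustration and do not come from the case.

```python
def update(prior, p_e_given_h, p_e_given_not_h):
    """Return P(H/E) from P(H), P(E/H) and P(E/~H)."""
    p_e = p_e_given_h * prior + p_e_given_not_h * (1.0 - prior)
    return p_e_given_h * prior / p_e


population = 60_000_000     # assumed population, as quoted in the text
one_in = 3_000_000          # a random person matches with chance 1 in 3 million

# Question 1: how likely is a match, given innocence?
p_match_given_innocent = 1 / one_in

# Question 2: how likely is innocence, given a match?
expected_matches = population / one_in                  # about 20 people
p_innocent_given_match = (expected_matches - 1) / expected_matches
p_guilty_given_match = 1 / expected_matches

print(f"P(match/innocent) = {p_match_given_innocent:.7f}")
print(f"P(innocent/match) = {p_innocent_given_match:.2f}")

# One more piece of evidence, with HYPOTHETICAL likelihoods: suppose it is
# 0.9 likely if the accused is guilty and 0.1 likely if he is innocent.
p_guilty_updated = update(p_guilty_given_match, 0.9, 0.1)
print(f"P(guilty) after further evidence = {p_guilty_updated:.2f}")
```

The posterior from the DNA evidence becomes the prior for the next item of evidence, which is the updating process described above.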

Assumptions for using Bayesian inference

Applying Bayesian inference in very large systems can sometimes be difficult because the items of evidence contributing to a hypothesis have to be independent of each other. This means that no item of evidence can affect another. This could be a tall order when several pieces of evidence are interconnected with several hypotheses.

Naive Bayesian Learning Systems

One of the problems with Bayesian inference is that large systems involve large numbers of hypotheses and items of evidence, which can lead to a combinatorial explosion in the number of interconnections between them. A great deal of hand coding can be avoided by applying machine learning to data. A naive Bayesian learning system is a probabilistic classifier that assumes the predictors (the items of evidence) are independent of one another, which is the same independence assumption described above. It’s an approach that draws upon learning from experience, combined with the application of Bayes Theorem.

It uses supervised learning, i.e., it learns from examples whose correct output is given to the learning algorithm, and it works better with large datasets. By transforming the data into a statistical frequency table, it can learn the probabilities needed to calculate the posterior values described earlier for each class. It then makes a prediction by selecting the class (hypothesis) with the highest posterior value. It is called naive Bayes learning because it simply assumes that the predictors are independent; even so, it works quite well when the independence criterion is violated. Naive Bayesian learning models are used behind the scenes in many applications that all of us use daily on our smartphones. For example, naive Bayesian learning is used extensively in spam filtering on email systems and in energy management systems on smartphones, among many other uses.
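The frequency-table idea can be sketched in a few lines of Python for a toy spam filter. The four training messages are made up, the function names `train` and `predict` are hypothetical, and the add-one smoothing (which stops unseen words producing a zero probability) is an assumption that the text above does not mention. Log probabilities are summed rather than multiplying raw probabilities, a common way to avoid very small numbers.

```python
import math
from collections import Counter, defaultdict


def train(examples):
    """Build frequency tables from (words, label) training examples."""
    class_counts = Counter()            # how many examples per class
    word_counts = defaultdict(Counter)  # word frequencies per class
    vocabulary = set()
    for words, label in examples:
        class_counts[label] += 1
        for word in words:
            word_counts[label][word] += 1
            vocabulary.add(word)
    return class_counts, word_counts, vocabulary


def predict(model, words):
    """Return the class with the highest (log) posterior score."""
    class_counts, word_counts, vocabulary = model
    total_examples = sum(class_counts.values())
    scores = {}
    for label, count in class_counts.items():
        score = math.log(count / total_examples)   # log prior, log P(H)
        total_words = sum(word_counts[label].values())
        for word in words:
            # Naive step: each word is treated as independent evidence.
            # Add-one smoothing is an assumption of this sketch.
            likelihood = (word_counts[label][word] + 1) / (
                total_words + len(vocabulary)
            )
            score += math.log(likelihood)          # add log P(E/H)
        scores[label] = score
    return max(scores, key=scores.get)


# Made-up training data for illustration only.
examples = [
    (["win", "money", "now"], "spam"),
    (["free", "money"], "spam"),
    (["meeting", "tomorrow"], "ham"),
    (["project", "meeting", "notes"], "ham"),
]
model = train(examples)
print(predict(model, ["free", "money", "now"]))
print(predict(model, ["meeting", "notes"]))
```

P(E) is left out of the score because it is the same for every class and so does not change which class ranks highest.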

Conclusions

Bayesian inference has become a successful AI technique both as a computational system and as one derived from a learning model. It is also well suited to a wide range of application domains if certain conditions prevail. Bayesian inference is likely to play a part in many AI systems developed in the future.
